Gradient Based Optimization#

Problem description:#

We have a TLE at a given time, which we call TLE\(_{0}\), and we look for a TLE at a future observation time (\(t_{obs}\)): TLE\(_{t}\).

We can propagate the state from \(t_0 \rightarrow t_{obs}\), and obtain the state at \(t_{obs}\). In general, we define the state (i.e., position and velocity), as:

We then have: TLE\(_0\), \(\vec{x}(t_0)\), and \(\vec{x}(t_{obs})\), but we want to find TLE\(_{obs}\). That is, the TLE at the observation time, that when propagated with SGP4 at its time, it corresponds to that \(\vec{x}(t_{obs})\). In general, this means that we are able to invert from the state to the TLE, at any given time.

In order to do this, we formulate the problem as looking for the minimum of a function of a free variables vector (i.e., \(\vec{y}\)) \(F(\vec{y})\), where this function defines the difference between the given state propagated from TLE\(_0\) at \(t_{obs}\), and the state generated from the free variables that make a TLE which is then propagated at its current time: TLE\((\vec{y})(t_{0}\rightarrow t_{obs})\). So we can reformulate the problem as:

We can do this via Newton method, by updating an initial guess \(y_{0}\) until convergence. Where the update is done as follows:

with \(DF\) the Jacobian of \(F\) with respect to \(y_k\). We can easily see that this Jacobian is made of the following elements:

where \(\tilde{x}_{i} \in [\tilde{x}_1,\tilde{x}_2,\tilde{x}_3,\tilde{x}_4,\tilde{x}_5,\tilde{x}_6]=[\tilde{x},\tilde{y},\tilde{z},\tilde{\dot{x}},\tilde{\dot{y}},\tilde{\dot{z}}]\); and \(y_i \in [no_{kozai}, ecco, inclo, mo, argpo, nodeo, n_{dot},n_{ddot},B^*]\).

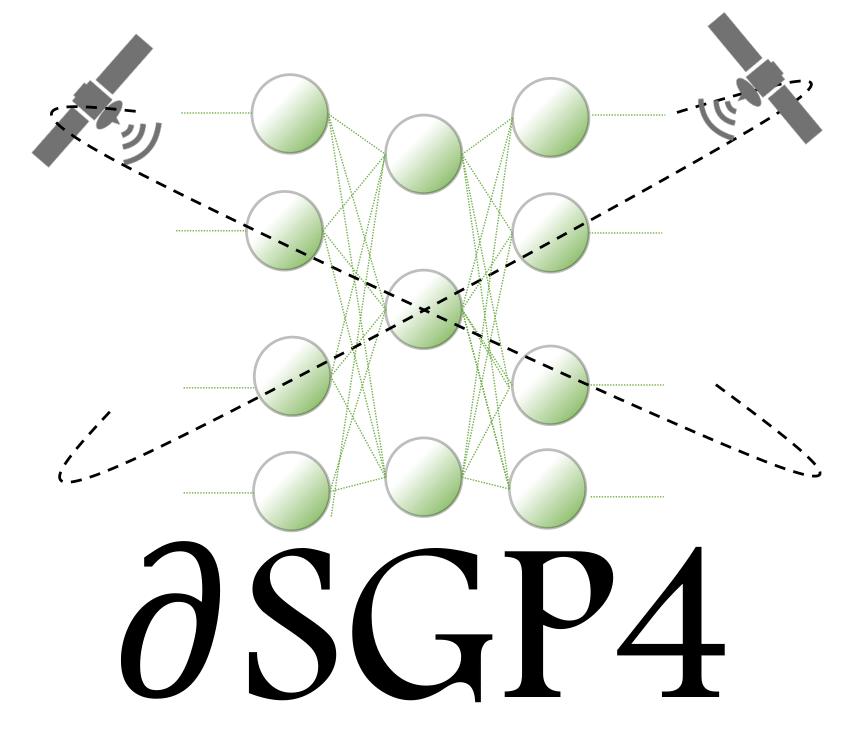

Since we built a differentiable SGP4, we can compute the gradient of the state w.r.t. the TLE inputs quite easily. Furthermore, the initial guess (\(y_{0}\)) will be found by the simple inversion from Cartesian to Keplerian, which does not correctly invert from state to TLE, but it is good as initial approximation.

import dsgp4

tles=dsgp4.tle.load(file_name="example.tle")

#we initialize the TLEs

dsgp4.initialize_tle(tles);

#we extract one:

my_tle=tles[0]

#when I propagate to zero, I expect the returned TLE to be identical to my_tle:

found_tle, y=dsgp4.newton_method(tle0=my_tle,time_mjd=my_tle.date_mjd,new_tol=1e-12,max_iter=10,verbose=True)

print(my_tle,found_tle)

Solution F(y) = 1.891438239197415e-14, converged in 5 iterations

TLE(

0 COSMOS 2251 DEB

1 34454U 93036SX 22068.91971155 .00000319 00000-0 11812-3 0 9996

2 34454 74.0583 280.7094 0037596 327.9100 31.9764 14.35844873683320

) TLE(

1 34454U 93036SX 22068.91971967 .00000319 00000-0 11812-3 0 9997

2 34454 74.0583 280.7094 0037596 327.9100 31.9764 14.35844873683320

)

#I now propagate until 1000 minutes after

found_tle, y=dsgp4.newton_method(tle0=my_tle,

time_mjd=my_tle.date_mjd+800.,

new_tol=1e-12,

max_iter=10,

verbose=True)

#Newton still converges, and the TLE is of course now different:

print(my_tle, found_tle)

Solution not found, returning best found so far

F(y): 1.364e-12

TLE(

0 COSMOS 2251 DEB

1 34454U 93036SX 22068.91971155 .00000319 00000-0 11812-3 0 9996

2 34454 74.0583 280.7094 0037596 327.9100 31.9764 14.35844873683320

) TLE(

1 34454U 93036SX 24138.91971967 .00000319 00000-0 11812-3 0 9997

2 34454 74.0583 254.2494 0037442 103.1744 22.5962 14.36399602683320

)